This is an unsorted collection of graphics demos I wrote years ago, back when I first stumbled upon shaders. I think university courses took a back seat that year. The website I had hosted at the university has since gone down, so I’m dumping some of the contents here.

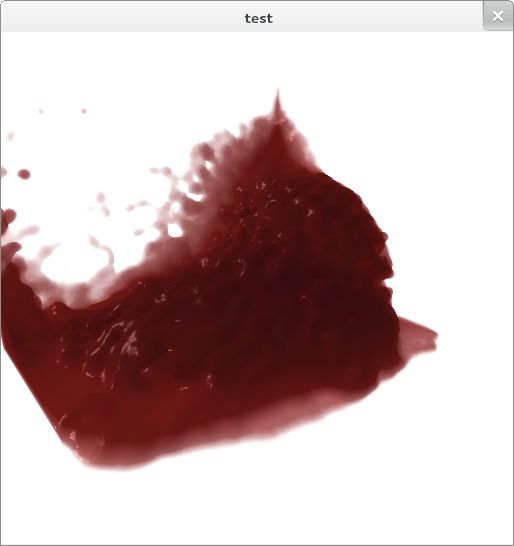

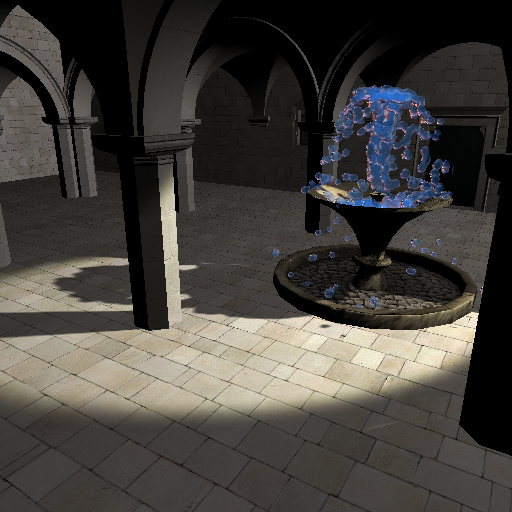

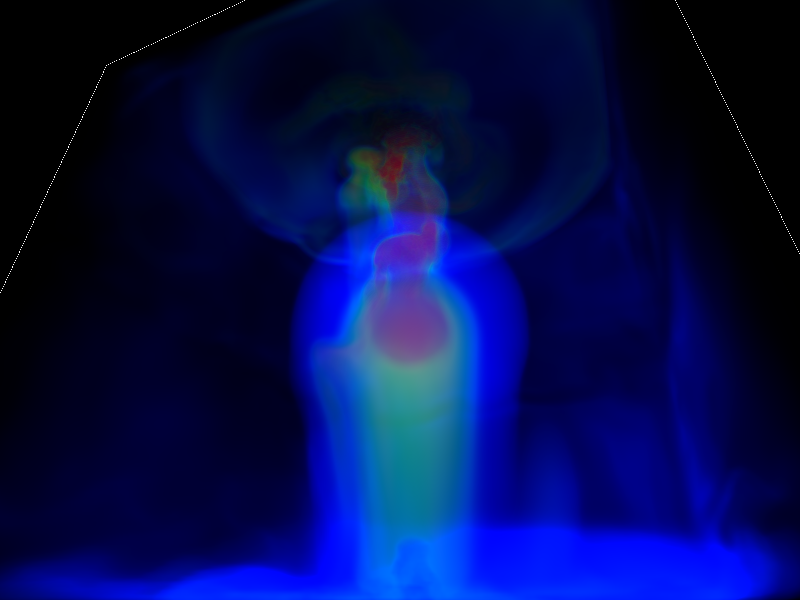

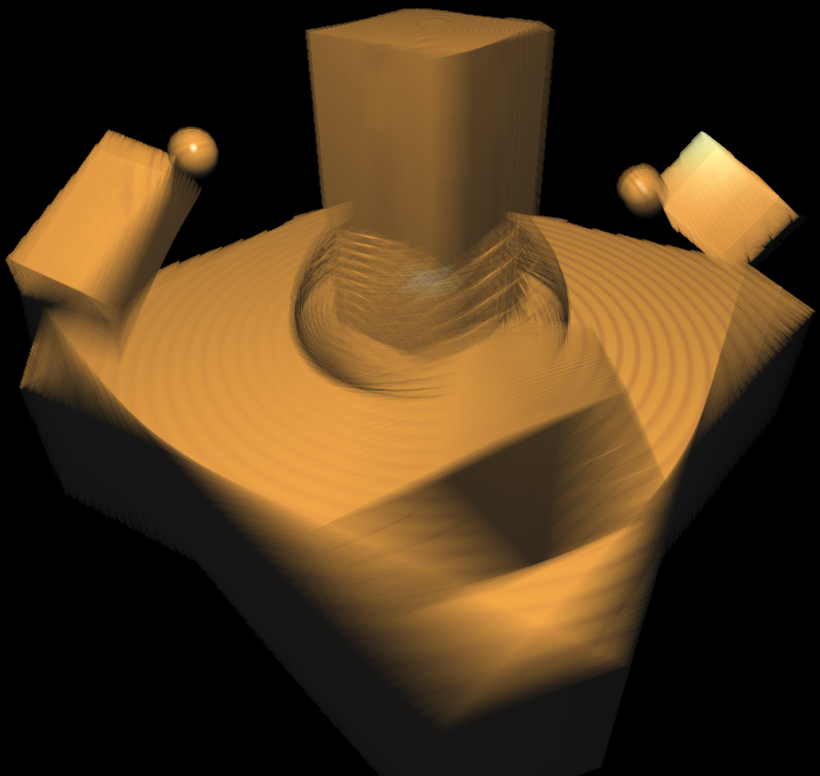

SPH fluid

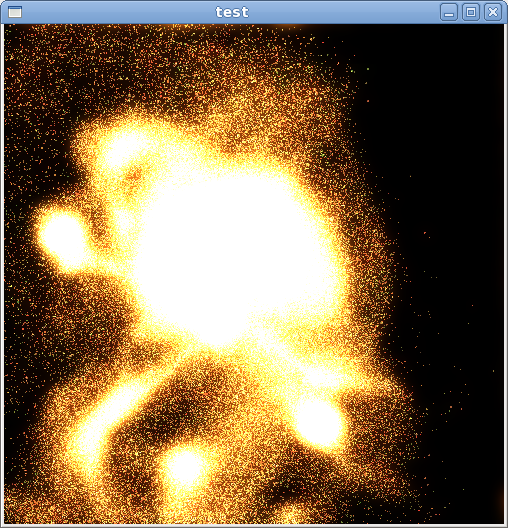

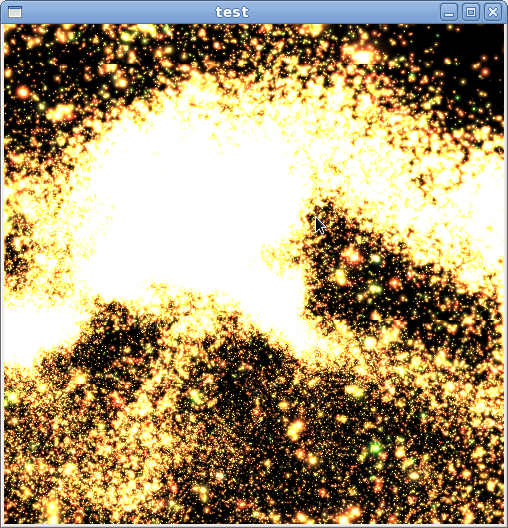

Computers were slower back then. These all used uniform grid data structures. The rendering was what I was really excited to get to, but of course I spent too much time tweaking simulation constants and trying to make the simulation feel bigger.
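As a rough illustration of the uniform grid idea (not the original demo code), the sketch below buckets particles by cell and only searches the surrounding cells for neighbours. The function names and the choice of making the cell size equal to the search radius are assumptions for the example.

```python
import math
from collections import defaultdict
from itertools import product


def build_grid(points, cell_size):
    """Bucket particle indices by their integer cell coordinates."""
    grid = defaultdict(list)
    for i, p in enumerate(points):
        cell = tuple(math.floor(c / cell_size) for c in p)
        grid[cell].append(i)
    return grid


def neighbours(points, grid, cell_size, i):
    """Sorted indices of particles within cell_size of particle i (excluding i)."""
    p = points[i]
    base = tuple(math.floor(c / cell_size) for c in p)
    found = []
    # Only the cell containing p and its adjacent cells can hold neighbours.
    for offset in product((-1, 0, 1), repeat=len(p)):
        cell = tuple(b + o for b, o in zip(base, offset))
        for j in grid.get(cell, ()):
            if j != i and math.dist(p, points[j]) <= cell_size:
                found.append(j)
    return sorted(found)
```

With the cell size equal to the smoothing radius, each particle only ever checks 27 cells in 3D instead of every other particle.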

Some videos: https://www.youtube.com/playlist?list=PLjhj5hnWysbTUPbnlwbP1abLN0TQBws6w

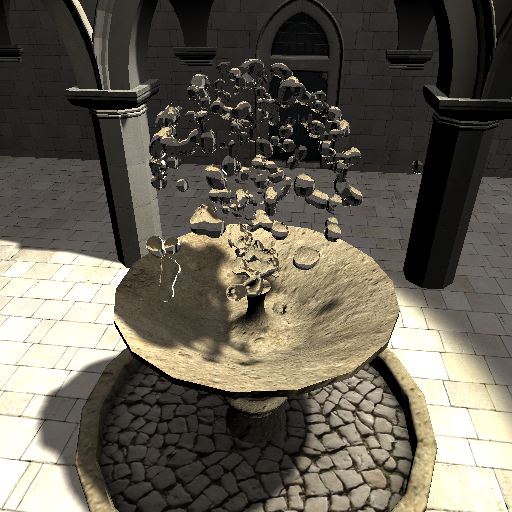

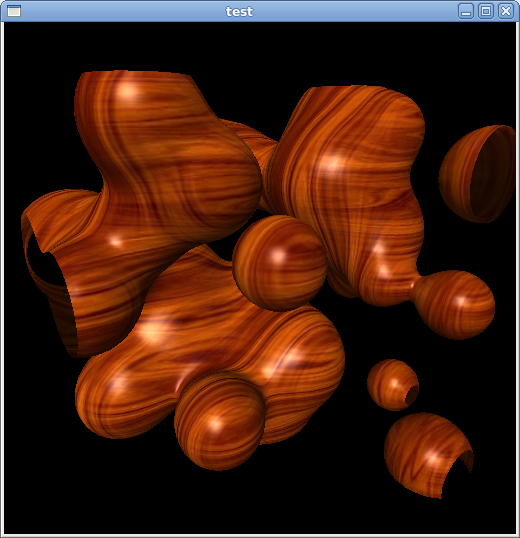

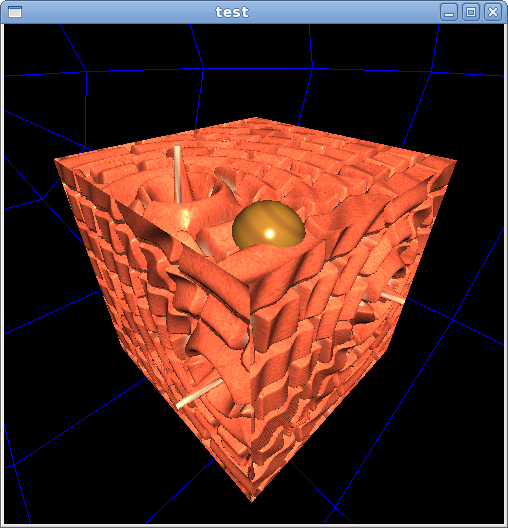

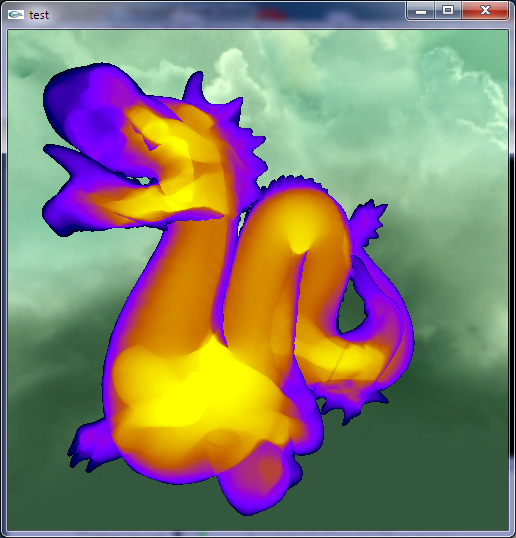

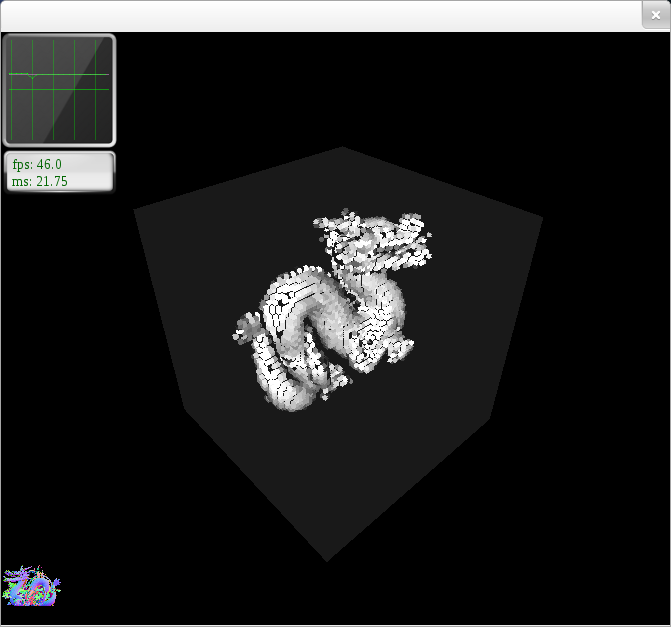

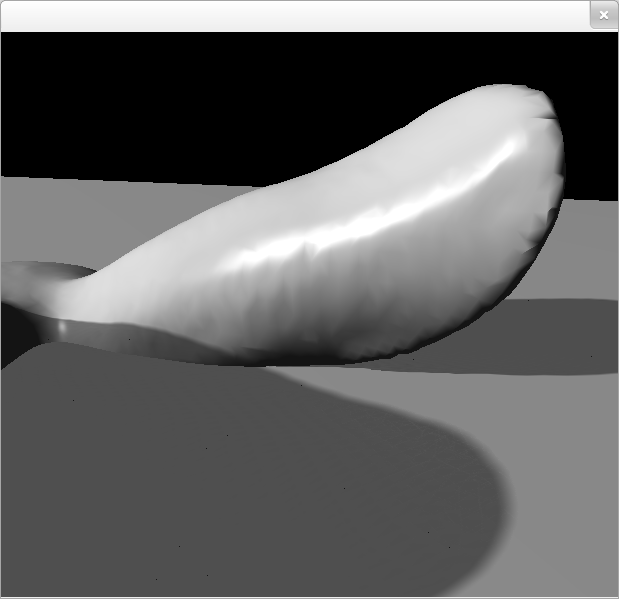

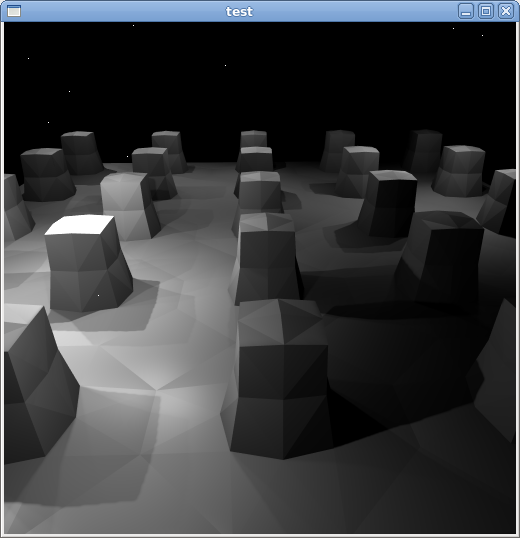

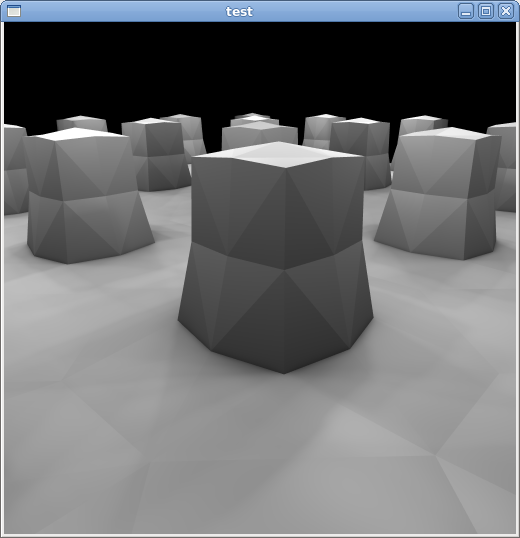

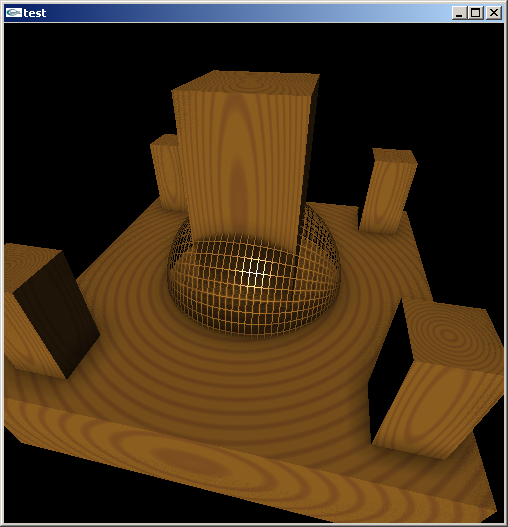

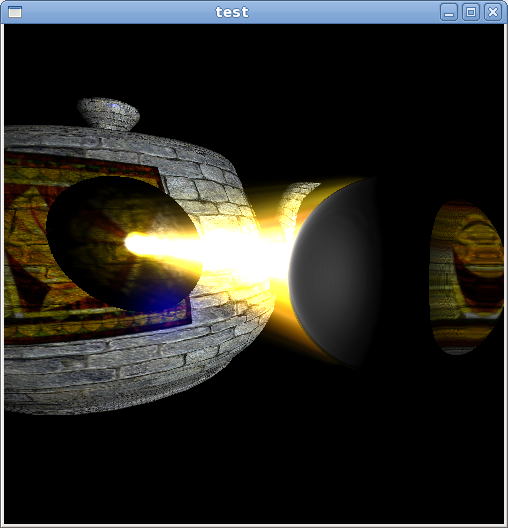

Metaballs

My first experience with geometry shaders and SSBOs (the marching cubes tables could not fit in constant memory at the time). It just renders a 3D grid of points and the geometry shader expands each one into triangles. I later used this technique for quick terrain chunk generation, using the transform feedback extension (see Atmosphere further down). The texture is planar mapped and blended based on the normal.
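The normal-based blending of planar mappings can be sketched roughly like this. It is an illustration under assumptions: the `sharpness` exponent, the function names and the `sample(u, v)` callback are made up for the example, and the normal is assumed to be non-zero.

```python
def triplanar_weights(normal, sharpness=4.0):
    """Blend weights for the X, Y and Z planar projections; they sum to 1."""
    powered = [abs(c) ** sharpness for c in normal]
    total = sum(powered)  # assumes a non-zero normal
    return tuple(p / total for p in powered)


def triplanar_sample(sample, position, normal, sharpness=4.0):
    """Blend three planar lookups of sample(u, v) according to the normal."""
    x, y, z = position
    wx, wy, wz = triplanar_weights(normal, sharpness)
    # Each axis projects the texture along itself, so it uses the other two
    # coordinates as texture coordinates.
    return wx * sample(y, z) + wy * sample(x, z) + wz * sample(x, y)
```

A higher `sharpness` narrows the region where two projections visibly overlap.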

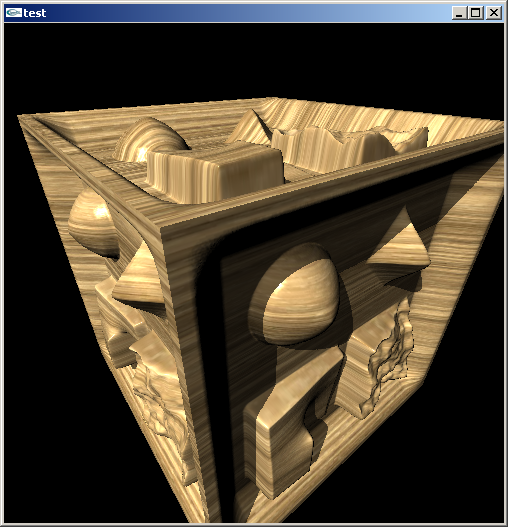

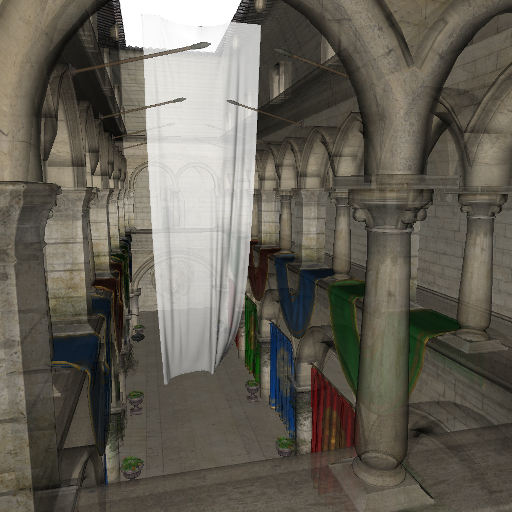

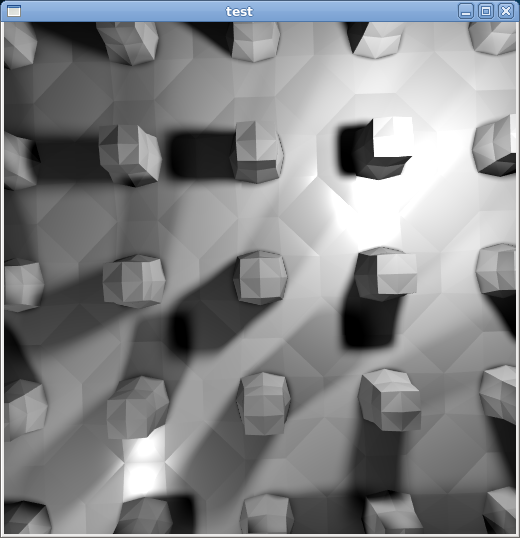

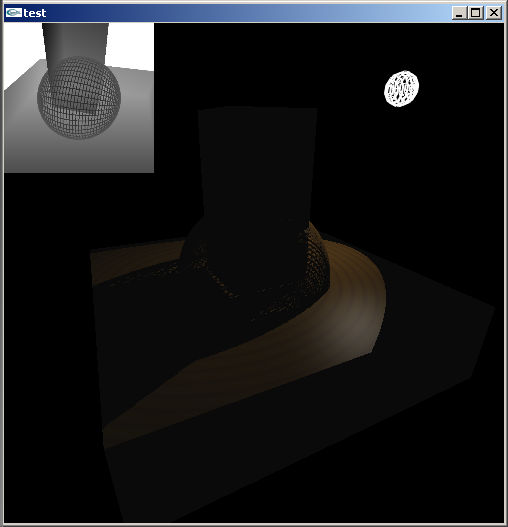

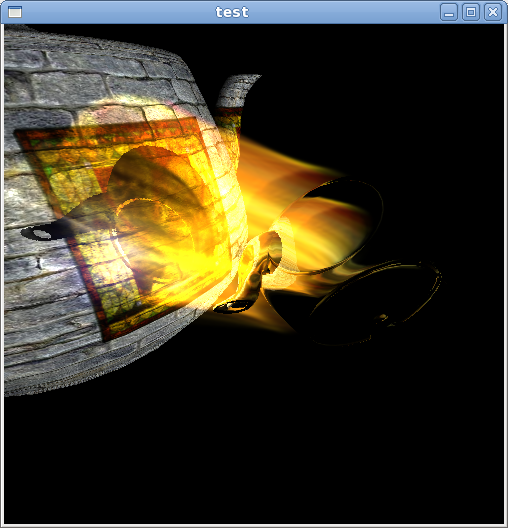

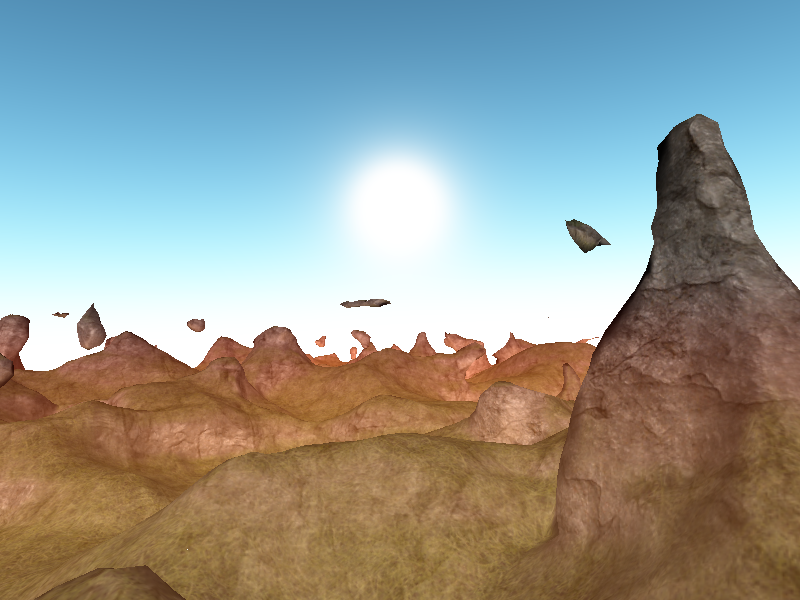

Parallax mapping

This is where it all started for me. Someone showed me the Oliveira and Policarpo papers and I decided I wanted to implement them. It took a few of these other demos before I started to understand the maths but I got there in the end. It was my first taste of outside-the-box thinking: realtime rendering can be done a different way (ok, so my knowledge of graphics history was limited back then too, what with only knowing about the mainstream GPU + raster triangles).

The geometry in the first image comes entirely from this heightmap. Rendered directly.

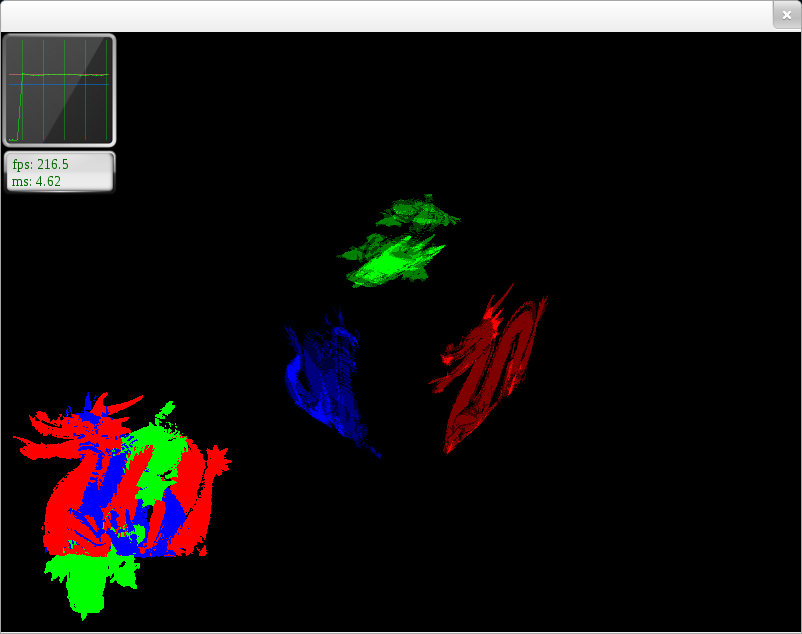
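Rendering a heightmap directly usually boils down to marching a ray through it. The sketch below follows the general linear-search-then-binary-search pattern associated with relief mapping. It is not the original shader, and `depth_at`, the step counts and the parameterisation are assumptions for the example.

```python
def relief_intersect(depth_at, entry, exit_, linear_steps=32, binary_steps=8):
    """Return t in [0, 1] where the ray first dips below the surface, or None.

    depth_at(u, v) gives the surface depth in [0, 1] (0 = top of the volume).
    The ray enters the top at `entry` (depth 0) and would leave the bottom at
    `exit_` (depth 1), so the ray's own depth at parameter t is simply t.
    """
    def below_surface(t):
        u = entry[0] + (exit_[0] - entry[0]) * t
        v = entry[1] + (exit_[1] - entry[1]) * t
        return t >= depth_at(u, v)

    # Linear search: take fixed steps until the ray is under the surface.
    step = 1.0 / linear_steps
    hit = None
    for i in range(1, linear_steps + 1):
        if below_surface(i * step):
            hit = i * step
            break
    if hit is None:
        return None

    # Binary search: refine between the last point above and the first below.
    lo, hi = hit - step, hit
    for _ in range(binary_steps):
        mid = 0.5 * (lo + hi)
        if below_surface(mid):
            hi = mid
        else:
            lo = mid
    return hi
```

The linear search can step over thin features if `linear_steps` is too low, which is the usual trade-off with this approach.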

Particle systems

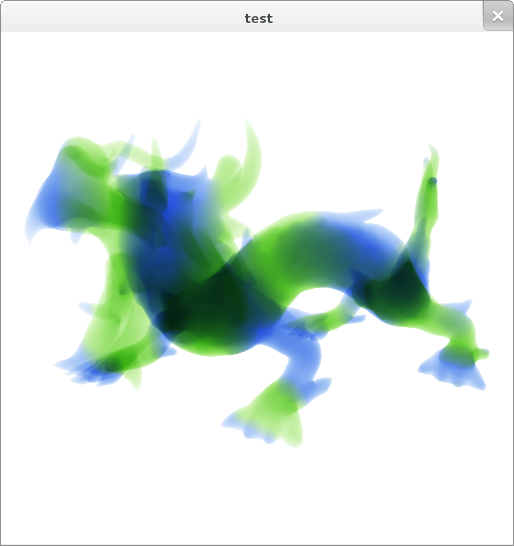

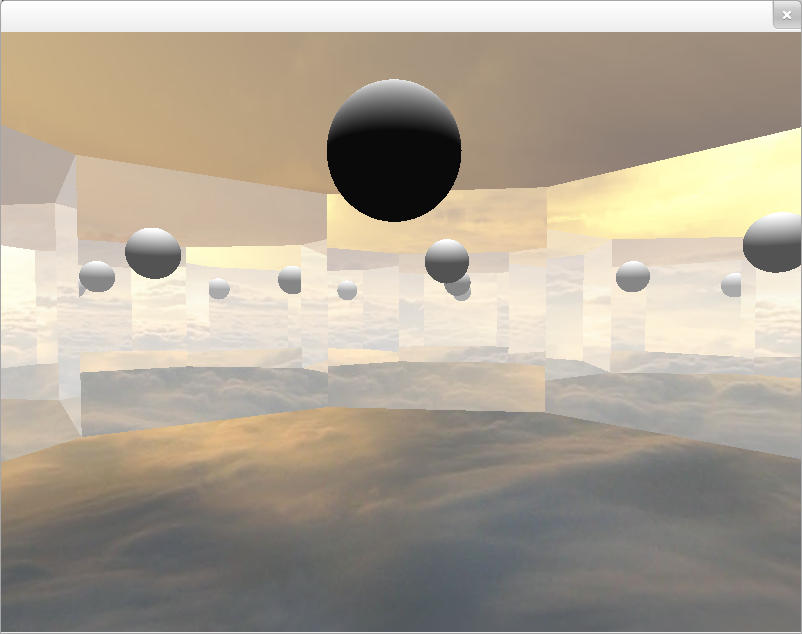

Transparency

Depth peeling involves rendering the geometry multiple times and capturing one layer of depth at a time. This false coloring shows the total depth.
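For a single pixel, the peeling loop can be sketched on the CPU like this. It is a toy model under assumptions: `fragments` stands in for the depths of all surfaces covering the pixel, and each loop iteration stands in for one full render pass.

```python
def depth_peel(fragments, max_layers):
    """Peel depth layers front to back, one layer per simulated pass."""
    layers = []
    previous = float("-inf")
    for _ in range(max_layers):
        # A pass keeps the nearest fragment strictly behind the last peeled layer.
        candidates = [d for d in fragments if d > previous]
        if not candidates:
            break
        previous = min(candidates)
        layers.append(previous)
    return layers
```

Note that fragments at exactly the same depth collapse into one layer in this sketch.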

I actually started out with order independent transparency. See the research section here. I did implement depth peeling as a comparison at one stage, but order independent transparency is just so much faster when you have per-pixel atomics.

I tried re-rendering the object after capturing multiple layers from each of the X, Y and Z directions. I eventually came back to this idea in my PhD thesis, but it’s a really tough problem to solve. At the time I’d seen the “true impostors” paper and really wanted to extend it for more complex kinds of geometry.

I also noted that the same data structure holds color from hidden surfaces. Perfect for depth of field. Simply drawing it as blurry transparent points sort of works, but “alpha” in alpha transparency is a statistical surface coverage and for a correct defocus blur you need to actually compute visibility. So that explains why you can see through objects here:

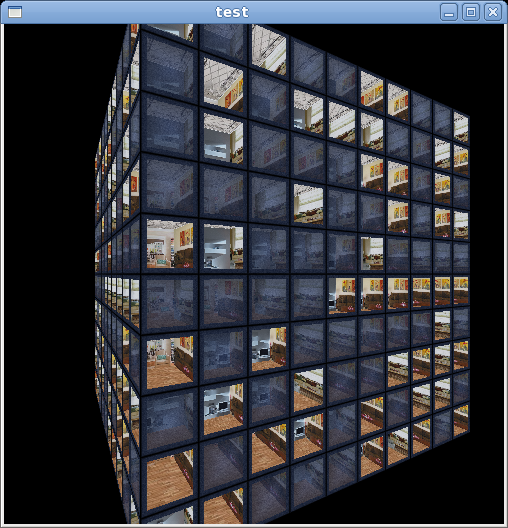

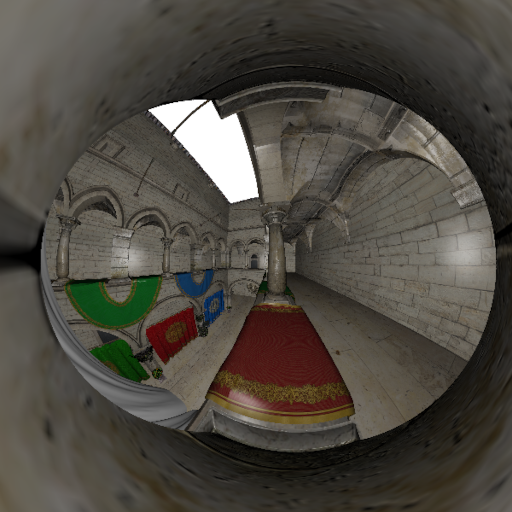

Interior mapping

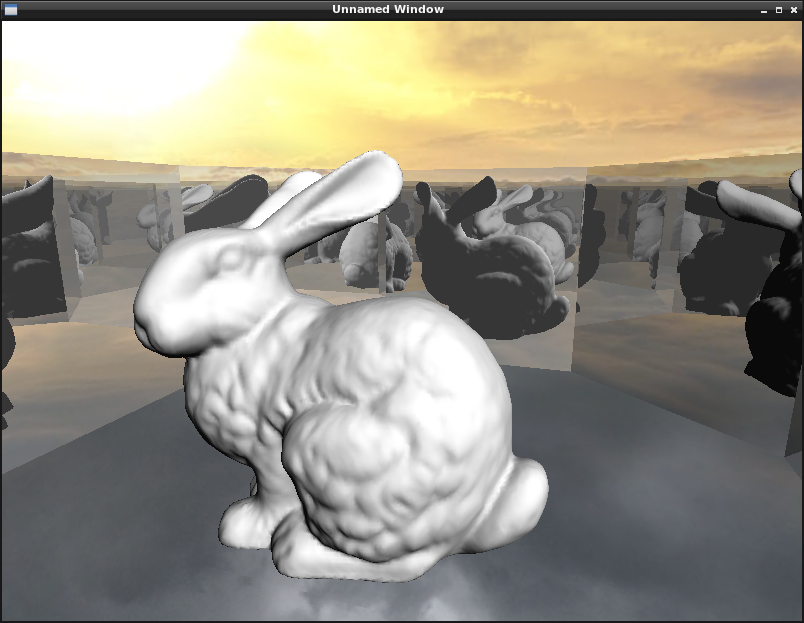

Portal rendering

OK, planar reflections, but it’s done with recursive matrix transforms and stenciling. All I need to do to have portals is adjust the matrix transform so it’s not a straight reflection. Each mirror and even mirror-within-mirror is rendering the whole bunny again. I do have clipping volumes I could be applying but the only thing taking advantage of them is the scissor rect.
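The matrix side of this can be sketched as follows. It assumes each mirror is given as a plane `n·x + d = 0` with a unit normal. The helper names are made up for the example and the stencil and scissor handling is left out entirely.

```python
def reflection_matrix(normal, d):
    """4x4 matrix reflecting points across the plane n.x + d = 0 (unit n)."""
    nx, ny, nz = normal
    return [
        [1 - 2 * nx * nx, -2 * nx * ny, -2 * nx * nz, -2 * nx * d],
        [-2 * ny * nx, 1 - 2 * ny * ny, -2 * ny * nz, -2 * ny * d],
        [-2 * nz * nx, -2 * nz * ny, 1 - 2 * nz * nz, -2 * nz * d],
        [0, 0, 0, 1],
    ]


def matmul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def transform_point(m, p):
    """Apply a 4x4 affine matrix to a 3D point."""
    v = (p[0], p[1], p[2], 1)
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))
```

A mirror seen inside another mirror is then just the product of the two matrices, for example `matmul(wall, floor)`, and a portal would swap the reflection for some other transform between the two openings.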

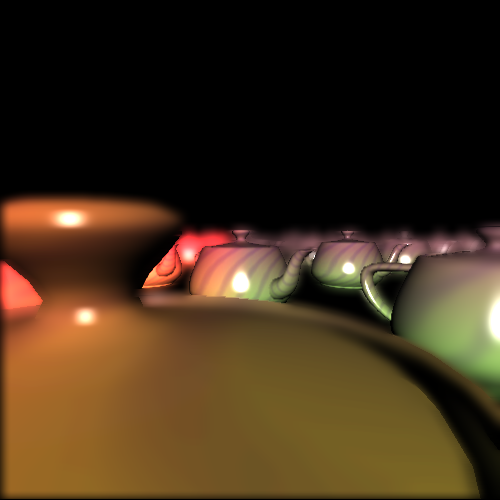

Deferred lighting

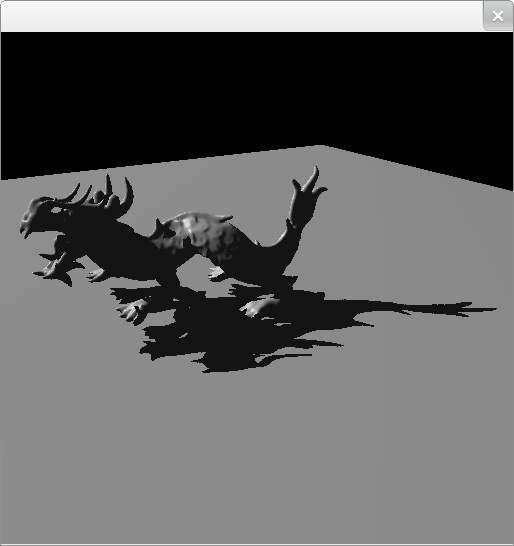

Irregular shadow maps

Perfect per-pixel hard shadows. Normal shadow mapping needs to pre-compute surface depth from the perspective of the light source. This is often inefficient as the frequency of the samples never perfectly matches what the camera is looking at. The irregular z-buffer stores arbitrary points in a uniform grid rather than a single depth per grid cell, like a depth buffer does. Irregular shadow maps compute depth for each camera pixel rather than arbitrarily on the regular grid of a shadow map.
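A CPU sketch of that idea, under simplifying assumptions: the light is orthographic, everything is already in light space as `(x, y, depth)`, and the names, `cell_size` and `bias` are invented for the example. Camera samples are bucketed into the light's grid, then each occluder triangle is tested exactly against the samples in the cells it overlaps.

```python
import math
from collections import defaultdict


def barycentric(p, a, b, c):
    """Barycentric coordinates of 2D point p in triangle abc (None if degenerate)."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    if det == 0:
        return None
    u = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    v = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return u, v, 1 - u - v


def irregular_shadows(samples, triangles, cell_size=1.0, bias=1e-6):
    """Return one boolean per sample, True where the sample is in shadow."""
    # Store the arbitrary sample positions in a uniform grid over light space.
    grid = defaultdict(list)
    for i, (x, y, _) in enumerate(samples):
        grid[(math.floor(x / cell_size), math.floor(y / cell_size))].append(i)

    shadowed = [False] * len(samples)
    for a, b, c in triangles:
        xs = [a[0], b[0], c[0]]
        ys = [a[1], b[1], c[1]]
        # Visit only the grid cells under the triangle's bounding box.
        for cx in range(math.floor(min(xs) / cell_size), math.floor(max(xs) / cell_size) + 1):
            for cy in range(math.floor(min(ys) / cell_size), math.floor(max(ys) / cell_size) + 1):
                for i in grid.get((cx, cy), ()):
                    x, y, depth = samples[i]
                    w = barycentric((x, y), a, b, c)
                    if w is None or min(w) < 0:
                        continue  # sample is not covered by this triangle
                    occluder = w[0] * a[2] + w[1] * b[2] + w[2] * c[2]
                    if occluder < depth - bias:
                        shadowed[i] = True
    return shadowed
```

Because every camera sample is tested at its exact light-space position, there is no shadow map resolution to run out of.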

Irregular soft shadows

Taking this a step further I realised you could do it the other way around and store lists of triangles per-pixel. Then you could compute the overlap of the triangle and a spherical light source for soft shadows!!! It works well until you have multiple occluders (that’s why the screenshot is so simple).

Depth of field

Depth of field from a single image is hard to do well. Maybe these days with some deep learning it could go ok. To be physically accurate you need information from hidden surfaces, potentially full-screen circular blurs and HDR. This is a full 2D kernel blur based on a difference in depth. It was slow and of course something always looks wrong.

Grid based smoke

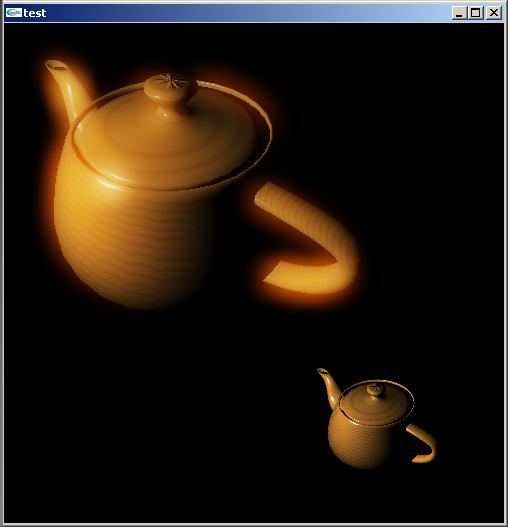

Variance shadow mapping

Variance shadow maps apply a blur to the shadow “strength”. It’s not really soft shadows as they don’t get softer with distance, but it avoids the square edges and sharpness from regular shadow maps. The ugly points are the light positions.

Also, this is fast! If you have enough lights, you don’t even need ambient occlusion :)
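The lookup at the heart of variance shadow mapping is a one-sided Chebyshev bound on the blurred depth and depth-squared values. A minimal sketch, with the parameter names and the `min_variance` clamp chosen for the example:

```python
def vsm_visibility(mean_depth, mean_depth_sq, receiver_depth, min_variance=1e-6):
    """Upper bound on the fraction of the filter region that is lit."""
    if receiver_depth <= mean_depth:
        return 1.0  # receiver is in front of the average occluder
    # Variance from the two blurred moments, clamped to avoid dividing by ~0.
    variance = max(mean_depth_sq - mean_depth ** 2, min_variance)
    delta = receiver_depth - mean_depth
    return variance / (variance + delta * delta)
```

For instance, blurring two texels with depths 1 and 3 gives a mean of 2 and a mean square of 5, so a receiver at depth 3 gets a visibility of 0.5.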

Not that it looks great, but this is my first ever shadow map.

Motion blur

Just camera motion.

Adding animated objects. You can see this uses the previous and current frames plus per-pixel velocity vectors.
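A velocity-based blur of this kind can be sketched as a gather along each pixel's velocity vector. This is a CPU illustration with assumed conventions: `velocity[y][x]` is the pixel's screen-space movement between the previous and current frame, in pixels, and samples are clamped at the image border.

```python
def motion_blur(image, velocity, samples=8):
    """Average `samples` taps centred on each pixel along its velocity vector."""
    height, width = len(image), len(image[0])
    blurred = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx, dy = velocity[y][x]
            total = 0.0
            for s in range(samples):
                # t runs from -0.5 to 0.5 so the blur is centred on the pixel.
                t = s / (samples - 1) - 0.5 if samples > 1 else 0.0
                sx = min(max(round(x + dx * t), 0), width - 1)
                sy = min(max(round(y + dy * t), 0), height - 1)
                total += image[sy][sx]
            blurred[y][x] = total / samples
    return blurred
```

With zero velocity everywhere the image comes back unchanged, which is a handy sanity check.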

This one was a CUDA implementation. IIRC I was going for a scatter blur rather than a gather, and in the early days CUDA was the only thing that could do it.

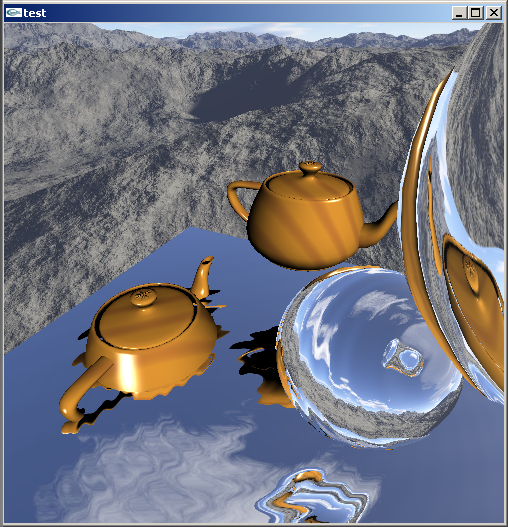

Reflections

Planar and non-planar, using a cube map.

HDR + Bloom

Ambient occlusion

Yes, screen space (SSAO).

Non-linear projections

Or rather, re-projecting a rectilinear rasterization.

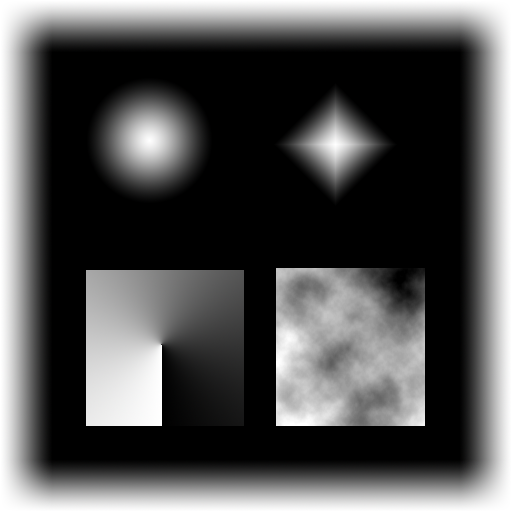

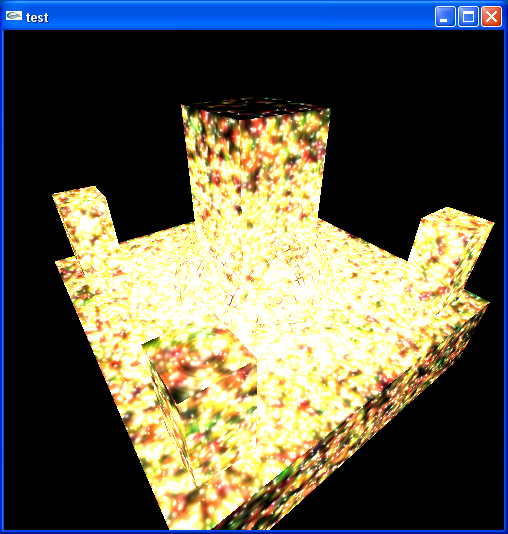

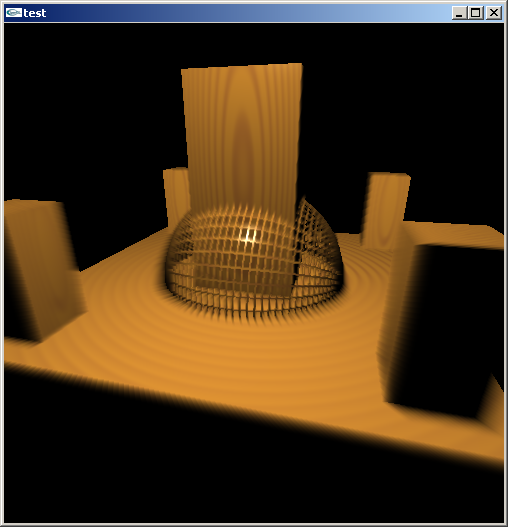

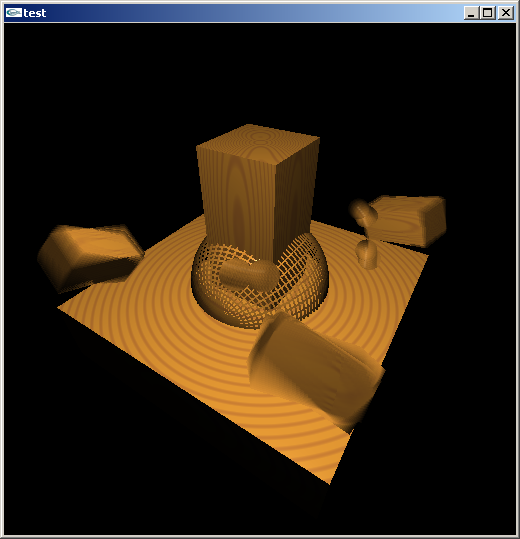

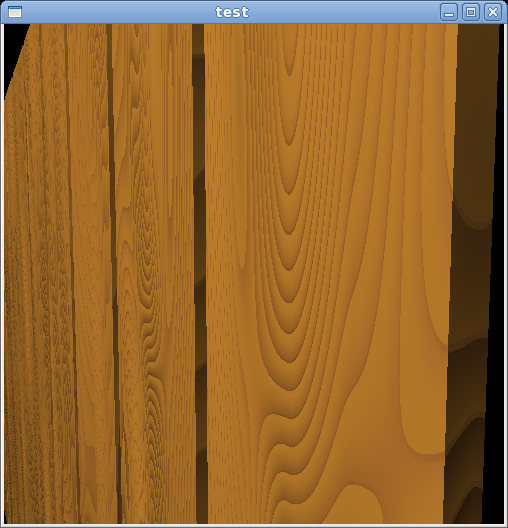

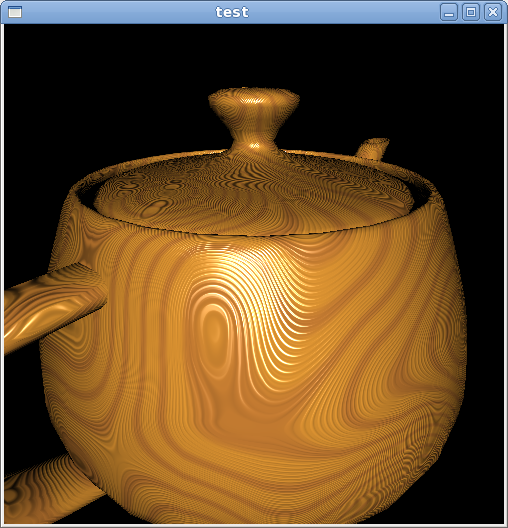

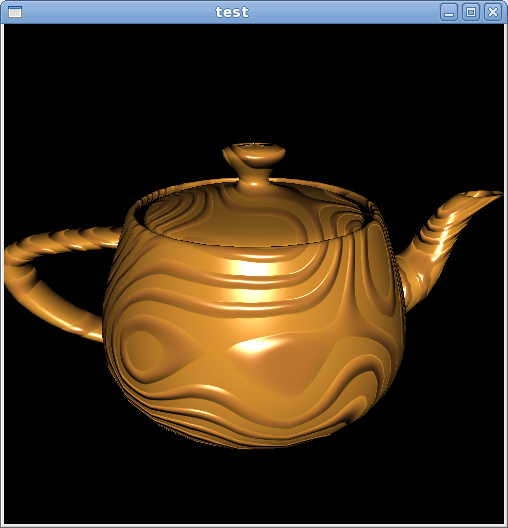

Procedural wood texture

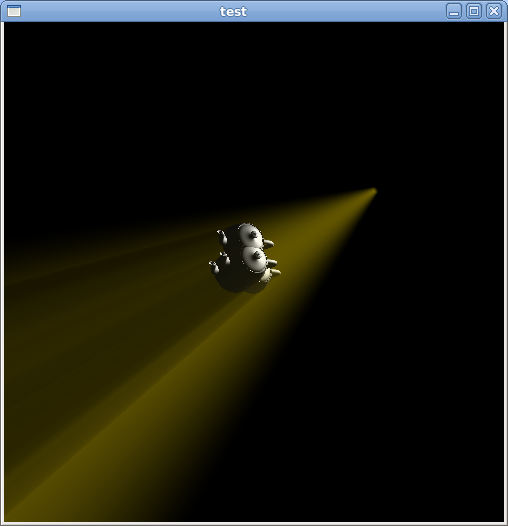

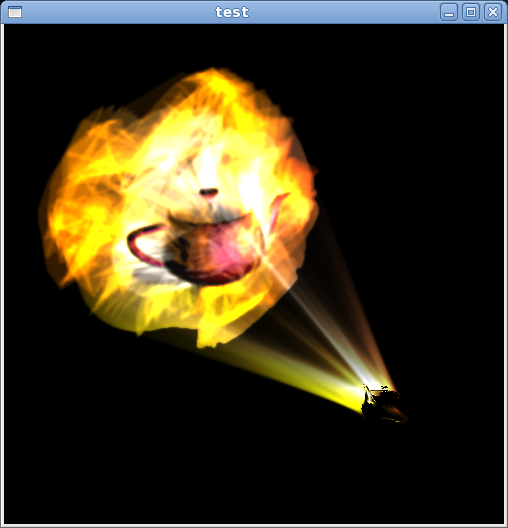

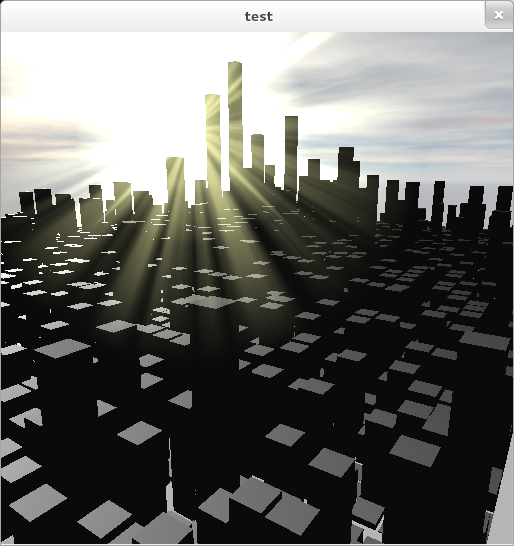

Volumetric lighting

I started with the cheap image-based one, which is more expensive than I’d have thought and doesn’t look as nice.

Finally, using a shadow map and marching through it.
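Marching through a shadow map can be sketched like this. The setup is a toy assumption: a 2D scene, a light shining straight down from `light_height`, and a 1D `shadow_map` holding the nearest occluder depth per cell along x.

```python
import math


def make_shadow_test(shadow_map, light_height, cell_size=1.0, bias=1e-4):
    """Build is_lit(p) for a light pointing straight down the y axis."""
    def is_lit(p):
        cell = math.floor(p[0] / cell_size)
        if not 0 <= cell < len(shadow_map):
            return True  # outside the map: treat as unshadowed
        depth_from_light = light_height - p[1]
        return depth_from_light <= shadow_map[cell] + bias
    return is_lit


def march_light_shafts(origin, direction, length, is_lit, steps=64):
    """Fraction of the view ray that is lit, sampled at `steps` midpoints."""
    step = length / steps
    lit = 0
    for i in range(steps):
        t = (i + 0.5) * step
        p = tuple(o + d * t for o, d in zip(origin, direction))
        if is_lit(p):
            lit += 1
    return lit / steps
```

The returned fraction would then scale the in-scattered light added to the pixel.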

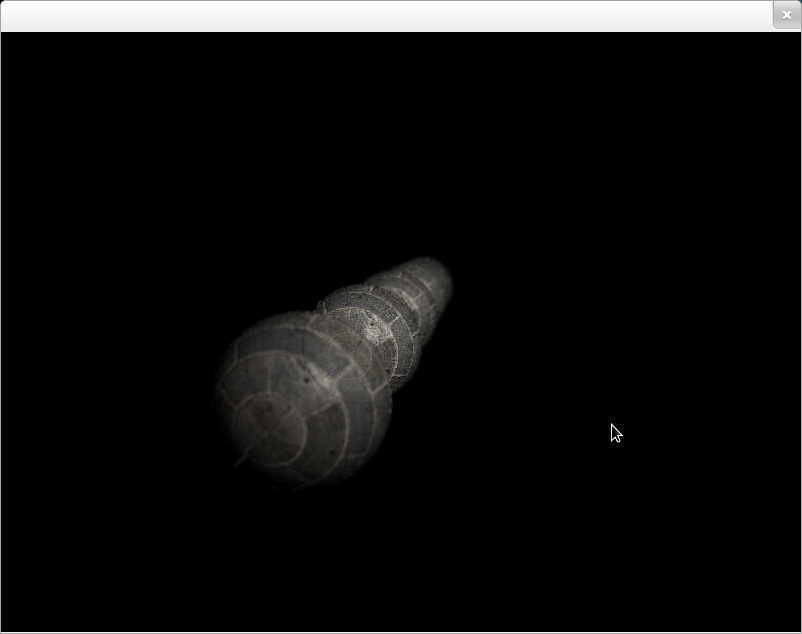

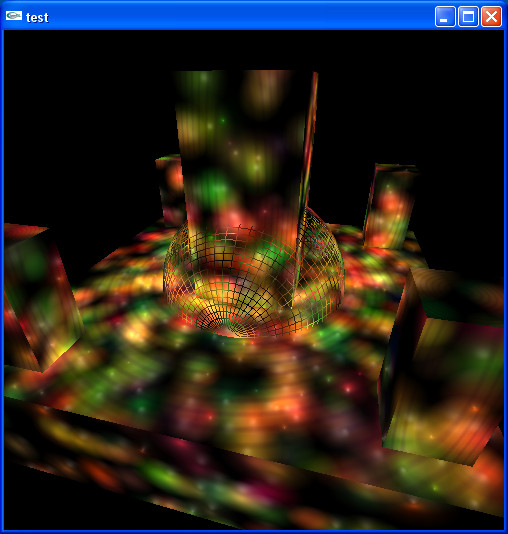

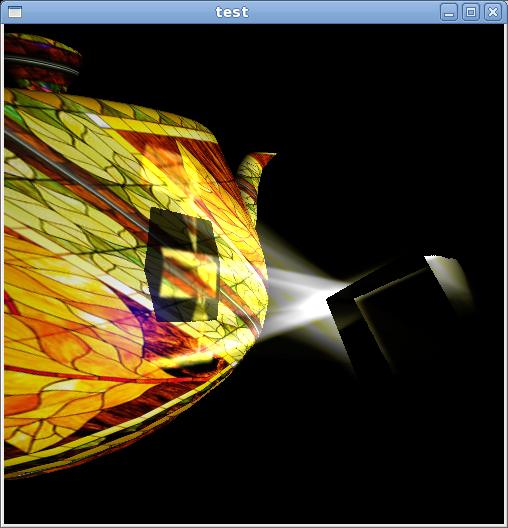

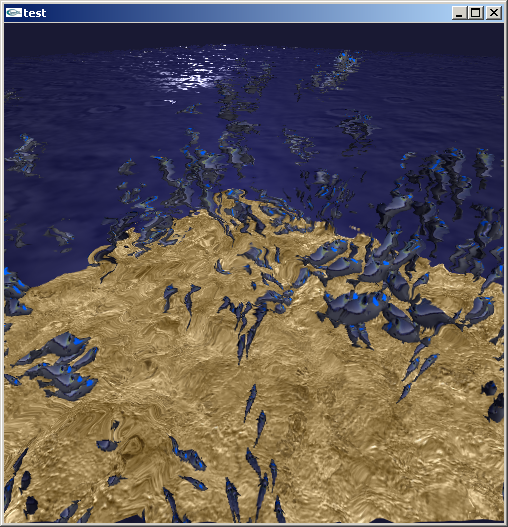

Caustics

So caustics were cool, but for some reason I jumped straight to volumetric caustics because it didn’t feel right to have one without the other. By far the best was drawing line primitives and blurring them. I would compute the refraction direction in a shader. Then I probably ray marched a shadow map to find the end point and a place to splat some point lights for the surface caustics. Caustics are in the Boids demo too.
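The refraction direction itself follows from Snell's law in vector form. The sketch below mirrors the formula behind GLSL's built-in `refract`, except that it returns `None` on total internal reflection instead of a zero vector. Both input vectors are assumed to be normalised, and `eta` is the ratio of refractive indices (incident side over transmitted side).

```python
import math


def refract(incident, normal, eta):
    """Refracted direction for unit vectors, or None on total internal reflection."""
    cos_i = sum(i * n for i, n in zip(incident, normal))
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None  # total internal reflection: no transmitted ray
    scale = eta * cos_i + math.sqrt(k)
    return tuple(eta * i - scale * n for i, n in zip(incident, normal))
```

Each refracted direction would be the start of one of the lines mentioned above.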

I also tried splatting polygons instead of points, but that was looking pretty messy.

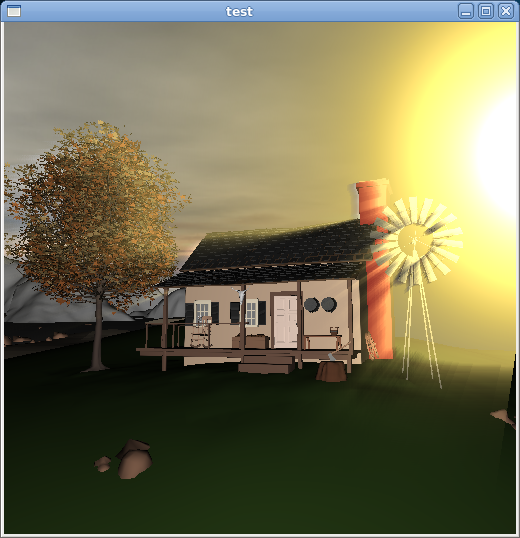

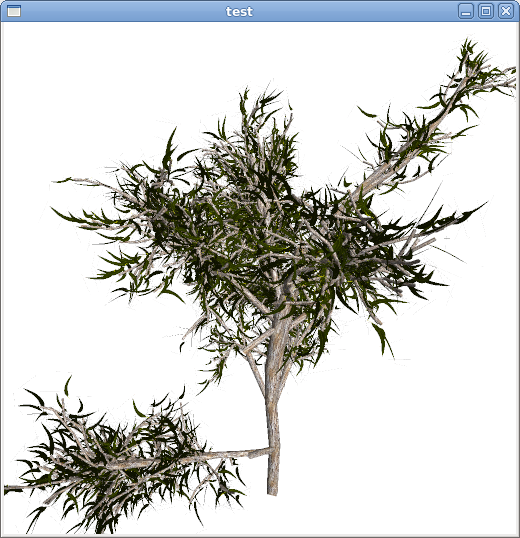

Animated procedural tree

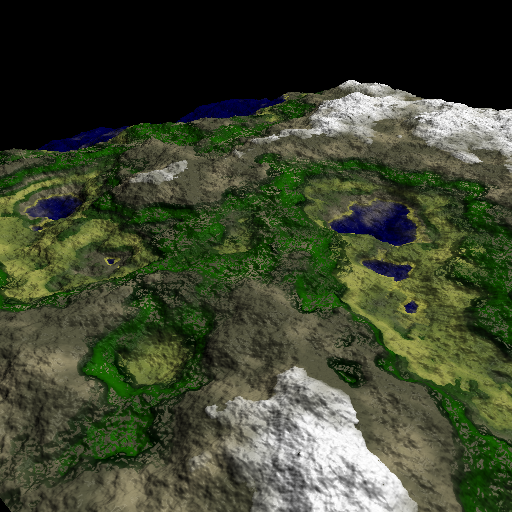

Procedural terrain texturing

Inspired by Terragen.

Lens flare

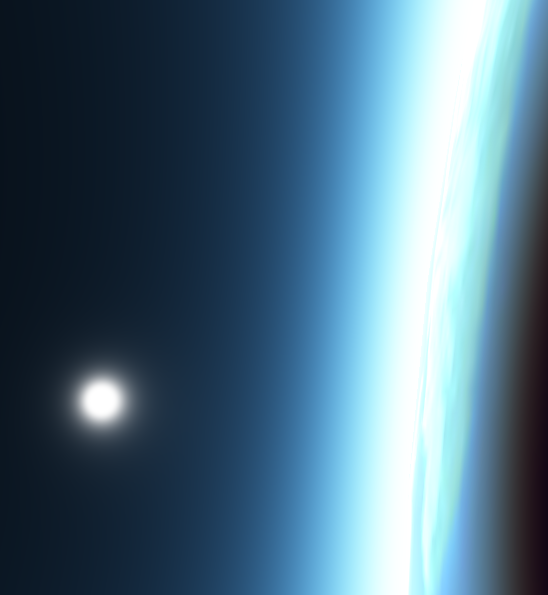

Atmosphere

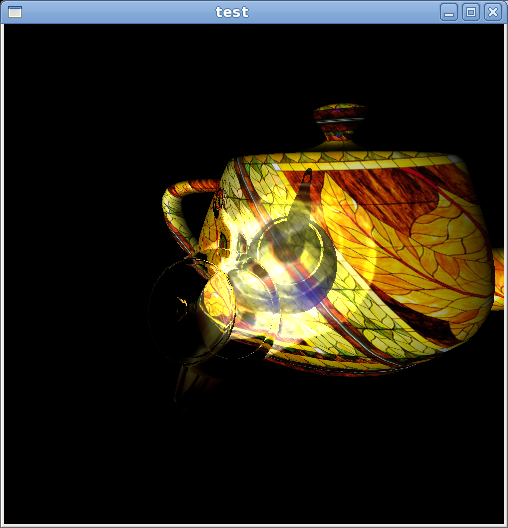

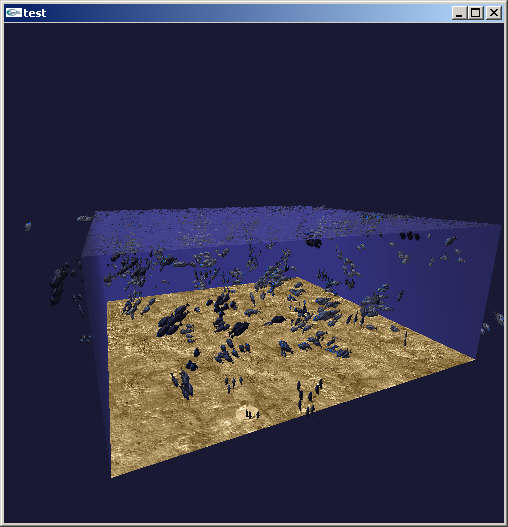

Boids

Also includes some refraction and caustics.

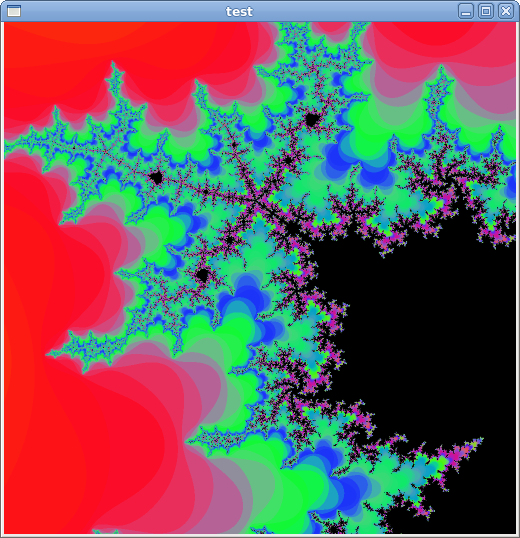

Mandelbrot shader